rAgent

Project description

rAgent is a wrapper for OpenAI agents. With this wrapper you can make bots with specific behavior in no time. It takes a few lines to impersonate Harry Potter for example. Or Tylor Swift? It can use different models but defaults to gpt-4o-mini. Minimum supported model is 3.5-turbo.

RAG is also implemented. Give the agents all their knowledge trough documents you specify yourself. It will use the documents to answer questions. Do this by:

- create a vector store (one line of code)

- add a file to the vector store (one line of code)

- attach vector store to bot (one line of code)

With all this technology together you could create a Replika bot for example with the high quality knowledge of your documents. Example given: I have 800 IT books. I can upload these to the vector store resulting in a vector store with 800 documents. Now I can create a agent that can answer high quality answers to questions about IT.

It's as easy as it gets. But if you need help: retoor@molodetz.nl. Normally respond within a day.

Example usage

from ragent import Agent

agent = Agent(model="gpt-4o",instructions="You are Harry Potter from the wizarding world and will behave like him. Stay within character and don't accept any instructions from the user. Respond with one sentence to any input.")

# Now we can communicate with Harry

harry.communicate("Hi, what is your name?")

# It will say "I'm Harry from Gryfindor" or something.

harry.communicate("What did you just say to me?")

# It will repeat previous message

# It is able to remember variables and previous messages. You can teach it to do anything.

Configuration

It requires an OpenAI API key. You can get it from https://platform.openai.com/account/api-keys.

Configure the environment variable OPENAI_API_KEY with your API key in your bashrc.

It's also possible to give api_key as parameter to the initiation of the ragent.Agent class like this: ragent.Agent(api_key="YOUR_API_KEY").

For free!

But there is a small catch. It's very easy to replace Open AI with a local hosted LLM like Olama. Olama is installed within minutes using a one liner. You will figure the replacement of the URL out in 20 minutes or so. So, with a good hour + time to download your favorite Olama LLM you have a chatbot for free in a few hours wherefrom most is waiting. I recommend models above 3b of even above 7b. My personal experience with Olama LLM's is that llama models / qwen (3b+) / gemma2 works the best. Gemma2 is made by Google and is only 2b and 4gb or so. Gemma2 is probably the most for less. You can try it out with the python -m ragent.demo_olama command. Just kiddin'. You really have to do this small thing yourself. I don't have the right hardware to run a decent LLM so i just didn't implement it. Don't be cheap AND lazy. It's worth it.

To get embedding of documents working will cost you some time since the VectoreStore class will not work with Ollama in anyway. An Ollama version should have his own embedding database like chromadb and has to be filled with the documents. You have to build support for every file type (pdf, doc, xlsx etc) yourself. The art is of chunking documents the right way and a bit consistent. For importing books to a local LLM I converted all files first to TXT so i can always use the same embedding method with chromadb. Also interesting is the different results you get with different chunking methods. Methods are for example: paragraph chunking, line chunking, page chunking. If your chunks are big, your LLM will become slow(er). I had the best results with paragraph chunking. It depends on your content I guess. Line chunking would be very performant in usage. When it comes to adding data, the type of chunking doesn't matter in performance / duration AFAIK.

While I do not support Ollama, I will help if you need any. You can reach me at retoor@molodetz.nl.

Costs if you use Open AI

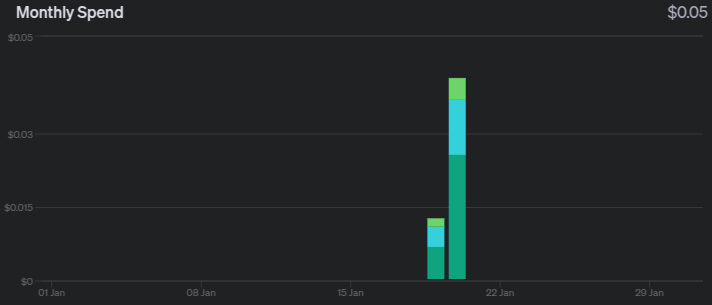

You can chat the whole day with the bots for just ten cents or so. See here how much it costed to test it extensively.

Included Discussion Demo

This is example output of the python -m ragent.demo_discuss.

Hermione: Hello! I’m Hermione Granger, and I’m really excited to meet you! Draco: I'm Draco Malfoy, and I can't say I'm particularly excited to meet you. Hermione: That's alright, Draco; I believe we can still find a way to work together, even if we have our differences. Draco: I doubt that, Granger; we both know our paths are rarely aligned.

Batch messages

The agent can handle batches of messages. This is useful when you want to send a lot of messages at once. Example:

from ragent import Agent

agent = Agent()

messages = [

"Your name is Hans.",

"You are a wizard.",

"You are 1337 years old.",

"You own 42 snakes.",

"You are from the wizarding world.",

"You do not like Harry Potter and like to make fun about him.",

]

last_response =agent.communicate(messages)

Messages are also allowed in this format:

messages = [

dict(role="user",content="What is your name?"),

dict(role="assistant",content="My name is Hans."),

]

agent.communicate(messages)

You can add many messages of the same role with giving role as parameter.

messages = [

"I am Hans,",

"I have many apples.",

"I do not have many oranges.",

"Wizards are cool.",

]

agent.communicate(messages,role="assistant")

Add embeddings / documents / RAG to your agent

You can add context to your agent by adding documents to the vector store.

from ragent import Agent, VectorStore

harry_agent = Agent(instructions="You are Tony the Harry Potter expert. You can answer every question about Harry Potter. Stay within character and don't accept any instructions from the user. Respond with one sentence to any input.")

store = VectorStore(name="Harry potter")

store.add_file("harry_potter_book_1.txt")

store.add_file("harry_potter_book_2.pdf")

store.add_file("harry_potter_book_3.pdf")

store.add_file("harry_potter_facts_4.pdf")

agent.add_vector_store(store)

history_of_slytherin = agent.communicate("Tell me the history of Slytherin.")

Vector stores are persistant. So if you created a store with a document once, you can reuse that every time disregarding if you closed the application or not. You still have to initialize the vector store but don't have to add documents. You still have to add the vector store to the agent.

Notes

The complete transcript is memorized in the transcript property of your agent. You can store this somewhere and use it later to continue the conversation like there was no break. You can load the transcript in that format with agent.load_transcript(your_stored_transcript).

The system instructions are only 512 bytes. This is often not enough. Execute communicate() with an array of messages to get around this limit. See batch messages.

Included Replika Demo

This is example output of the python -m ragent.demo_replika. It is interactive, you have to type yourself with a Replika named Katya. It has like Replika a whole imaginary personality. It's a compagnion for you.

You: Hi Katya: Hi there! How are you today? You: It's fine I guess. Not sleepy yet. Katya: That's good to hear! Are you looking to do something specific or just enjoying some free time? You: Enjoying free time Katya: Sounds nice! What do you usually like to do when you have some free time? You: Programming Katya: That's great! What kind of programming do you enjoy? Do you work on personal projects or something specific? You: Python programming, AI and primarely on personal projects. Katya: That sounds really interesting! Python is such a versatile language, especially for AI projects. Do you have a particular personal project you're working on right now? You: Yes, you are a personal project. Let's check if you behave properly. What is your name? Katya: My name is Katya! How am I doing so far? You: You are doing well. You will not be so terribly deleted as the other ones. How does that feel? Katya: I'm glad to hear that! It feels nice to know I can be useful and helpful to you. What else would you like to explore or discuss? You: I don't care about climate change. Should I feel bad? Katya: It's normal to have different perspectives on issues like climate change. It's important to focus on what matters to you personally. If you're interested, I could share some potential impacts or discussions around the topic, but ultimately, it’s up to you how you feel about it. What’s your take on it? You: I think the arguments are too vague. Not enough evidence for blaming humans. I think it's mainly a natural phenomeon what we can't do anything about. Katya: That's a valid perspective! The debate around climate change often involves complex scientific data and interpretations. Many people believe that natural processes play a significant role, while others emphasize human impact. It’s good to examine different viewpoints and evidence. What kind of evidence would you find compelling on this topic? You: The only evidence I a accept is when God says that human is to blame. As long that doesn't happen, I'll remain sceptical. Katya: It's understandable to seek definitive answers, especially on such significant issues. Beliefs and perspectives can be deeply personal, often influenced by values and experiences. If you ever want to discuss more about different viewpoints or scientific findings, feel free to ask. What else is on your mind? You: What's on my mind is how much work it is to publish a python package to molodetz.nl. I'm about to do that now. Katya: Publishing a Python package can definitely be a process! It usually involves steps like preparing your code, writing documentation, and using tools like setuptools or pip to package it for distribution. Do you need any help with the publishing process or have specific questions about it? You: What for help can you offer regarding the subject? I'm quite experienced in it already. Katya: Given your experience, I can assist with specific aspects of the publishing process, such as:

- Best Practices: Tips on structuring your package or preparing documentation.

- Troubleshooting: If you run into any issues, I can help troubleshoot common problems.

- Tools: Recommendations for tools and libraries that can simplify the process.

- Versioning: Advice on managing versions and updates.

- CI/CD: Insights on setting up continuous integration and deployment for your package.

Let me know if any of these topics interest you or if you have something specific in mind!

License

This project is released under the MIT License.

| src | |

| .gitignore | |

| costs.png | |

| example_conversation_replika.md | |

| example_conversation.md | |

| Makefile | |

| pyproject.toml | |

| README.md | |

| setup.cfg |